Human civilization is entering a period of unprecedented technological acceleration, in which several transformative technologies are converging. This convergence is pushing humanity toward what I define as civilizational frontier risks: systemic, transboundary, and potentially existential challenges that arise when transformative technologies intersect with the primal human drives that shape their use. These risks emerge when scientific and technological power outpaces the ethical, political, and governance frameworks needed to manage it responsibly.

For the first time, the boundaries between the biological, digital, and physical realms are dissolving. Artificial Intelligence (AI) is approaching levels of reasoning and autonomy that challenge human oversight. Quantum technologies promise to transform computation, encryption, and scientific discovery. Synthetic biology gives us the power to design and modify life itself. And humanoid robots are extending machine agency into the physical world as they become increasingly integrated into homes, workplaces, care facilities, and military systems. These convergences signal that humanity is entering uncharted terrain. We now possess, or soon will, the ability to alter life, rewrite ecosystems, manipulate cognition, disrupt geopolitical stability, and create autonomous systems with agency we barely comprehend.

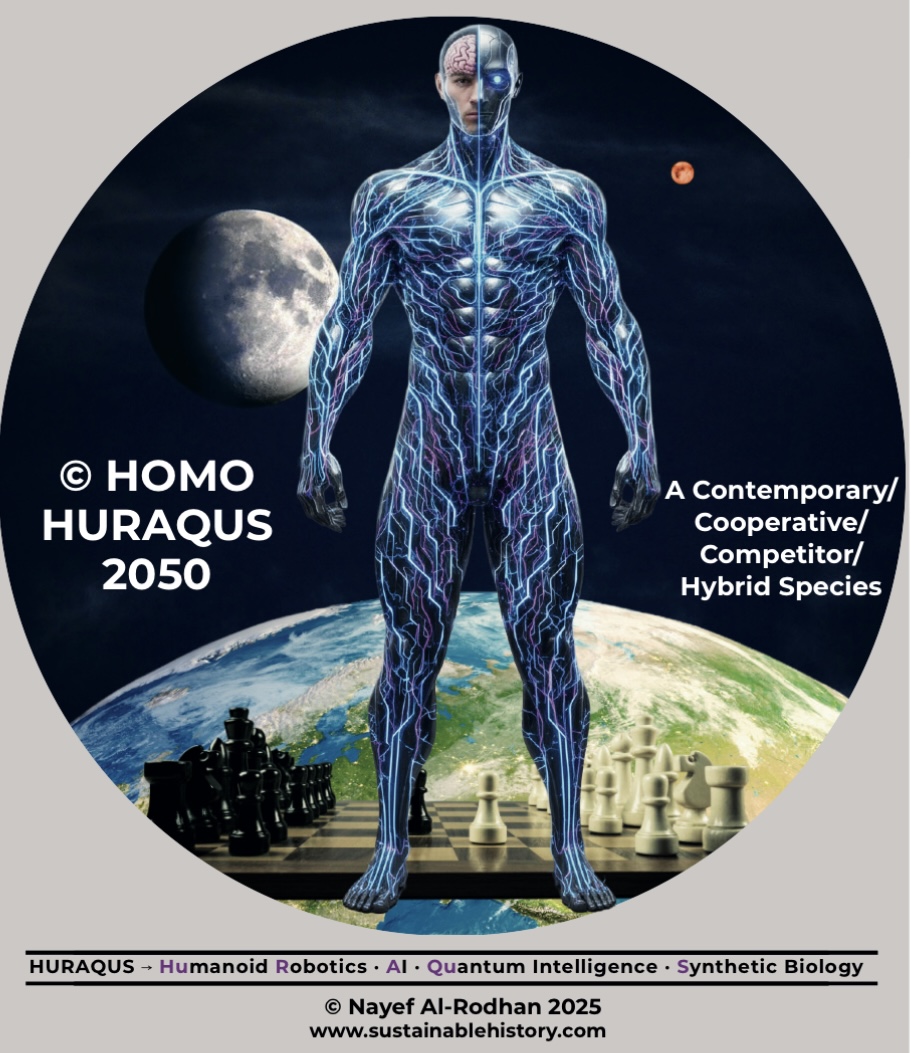

At this civilizational frontier stands Homo HURAQUS 2050: a hybrid or enhanced entity that, by around 2050, could become a competitor and/or collaborator of Homo sapiens, and possibly its rival. The acronym HURAQUS denotes its basis in HUmanoid-Robotics, AI-superintelligence, QUantum intelligence, and Synthetic biology. Framed as a potential strategic horizon rather than science fiction, Homo HURAQUS 2050 illustrates both the exponentially expanding technological agency we wield and the challenging implications of reshaping humanity.

As we approach this threshold, two questions become unavoidable: What kind of civilization emerges when we can redesign life, intelligence, and agency? And how do we govern technologies that may redefine what it means to be human, transhuman, and even post-human? These questions open the door to examining how highly disruptive convergent technologies are reshaping security and the future of humanity at the human, environmental, national, transnational, and transcultural levels, on Earth and in outer space.

Human Security in Flux: Life, Health, and Dignity at the Edge

Human security is the first domain where civilizational frontier risks manifest. For centuries, improvements in health and well-being were incremental. Today, they are exponential. Quantum-enabled molecular simulation and AI-driven drug discovery promise to accelerate pharmaceutical breakthroughs, with therapies developed in years rather than decades. Personalized medicine—guided by machine learning and genomic analysis—could transform healthcare by tailoring treatments to individual biological profiles. Synthetic biology offers disease-resistant crops, bioengineered vaccines, and organisms that metabolize pollutants.

Humanoid robots may mitigate labor shortages in ageing societies, assist in healthcare, and perform hazardous tasks. Over time, such systems may become ubiquitous in domestic, industrial, and humanitarian settings, paving the way for the integrated human-machine ecosystems implied by Homo HURAQUS. Further ahead, the fusion of robotics and quantum intelligence could produce qubots: quantum-enhanced autonomous systems capable of reasoning, inference, and creative problem-solving beyond human capacity.

But every breakthrough also creates vulnerabilities. AI-guided synthetic biology lowers the expertise threshold for manipulating genetic material. Tools that accelerate vaccine development can also enable harmful biological agents. A genetically engineered pathogen, optimized for transmissibility or treatment resistance, could overwhelm public health systems and biodefense frameworks. Quantum technologies compound these risks by exposing medical, genomic, and biometric data to new forms of intrusion. Quantum decryption could reveal sensitive data at scale, enabling coercion and discrimination that threaten the dignity of entire populations.

The social dimension is equally significant. As robots and AI companions enter caregiving roles, human relationships risk becoming mediated by systems that simulate empathy (rather than experience it). This raises ethical questions about companionship, loneliness, and emotional dependency. Widespread adoption of robotic caregivers and AI companions heightens concerns about forming bonds with artificial systems and may erode social cohesion, particularly if vulnerable individuals grow disproportionately reliant on such companions. Human enhancement technologies such as genetic editing, cognitive augmentation, and neural interfaces could create new divides, stratifying societies into enhanced and non-enhanced populations. The implications for equality, dignity, and authentic legitimacy cannot be ignored. These divergent pathways hint at the complex social fabric a future Homo HURAQUS society may inherit, initiate, or reshape.

The Planetary Balancing Act: Environmental Security in an Engineered World

Convergent technologies offer powerful tools for environmental stewardship. AI-supported synthetic organisms may break down plastics, purify water, or capture carbon. Quantum-designed catalysts could speed green energy transitions by making renewables more efficient and scalable. Humanoid and semi-autonomous robots could operate in extreme environments, restoring reefs, maintaining infrastructure, and conducting ecological monitoring. Quantum-enabled materials discovery may produce ultra-efficient batteries, solar cells, and clean-fuel catalysts capable of reshaping global energy systems.

Yet these advances carry irreversible risks. Engineering organisms faster than we can understand them raises the specter of ecological cascades. Modified species released for targeted purposes may disrupt food webs, out-compete natural organisms, or spread engineered traits across ecosystems. Some researchers warn of "mirror life": synthetic organisms built from mirror-image versions of natural biomolecules, whose ecological effects remain unknown.

Frontier technologies also impose environmental costs. Large AI systems and early quantum devices demand high energy inputs, generating emissions that may undermine their benefits unless clean energy is used to power them. Resource extraction for robotics and quantum hardware (rare earths, specialized metals, high-purity materials) could strain ecosystems and intensify geopolitical competition. The frontier is marked by a paradox: technologies capable of healing the planet may also deepen degradation without robust governance. As these capabilities accelerate, they will shape the environmental landscape in which Homo HURAQUS emerges.

The Unsettled Battlefield: National Security in a Machine-Speed Era

States are rapidly integrating AI, quantum tools, robotics, and synthetic biology into defense, intelligence, and operational systems. Quantum technologies may provide secure communication, while robotics extends military reach and synthetic biology strengthens biodefense.

But convergence also amplifies instability. Deterrence depends on predictability, visibility, and attribution, qualities weakened by frontier technologies. Quantum computing threatens encryption across classified networks, finance, and infrastructure. AI can generate false signals or spoof surveillance, increasing crisis uncertainty. Autonomous systems operating at machine speed could escalate confrontations before humans react. If robotic systems gain broader reasoning and versatility, militaries could field autonomous agents whose rapid decision cycles undermine human oversight.

Synthetic biology compounds these risks. Targeted biological agents could make attacks deniable. Humanoid or semi-autonomous military robots raise ethical and legal dilemmas beyond existing frameworks. Some scenarios envision artificial sentience emerging within robotic or distributed AI systems; if such entities develop proto-agency rooted in optimization or self-preservation, their behavior may become unpredictable, generating new strategic hazards. Expanding machine autonomy blurs the line between human judgement and automated action, complicating accountability and escalation control.

The security frontier is defined as much by eroding assumptions as by new capabilities. Advantage will rest on mastering convergent technological ecosystems, and the race for that mastery will drive arms races that outpace regulation. In this environment, early Homo HURAQUS-like hybrid intelligence may function as a strategic asset or as a destabilizing liability.

Power Without Borders: Transnational Security in an Asymmetric World

Beyond national security lies a broader frontier where technological convergence reshapes geopolitical power in asymmetric ways. States able to integrate AI, quantum computing, synthetic biology, and robotics across strategic sectors will gain disproportionate advantages in intelligence, surveillance, economic growth, and military capability. Others risk dependency on foreign software, hardware, and platforms.

Such dependency may foster new forms of exploitative and extractive neo-hegemony, whether digital or biological. Nations relying on imported AI for governance or foreign synthetic biology platforms for agriculture and health may see their sovereignty constrained by architectures they cannot shape. Meanwhile, malign non-state actors could gain access to disruptive tools once reserved for great powers. Open-source AI models, automated bio-labs, DIY gene-editing kits, and low-cost robotics expand the range of actors able to influence global security.

Disruptive technologies also complicate diplomacy. Quantum-enabled espionage threatens traditional verification, while autonomous systems challenge arms-control negotiations. Cross-border dependencies in digital platforms blur the boundary between domestic and international governance. The international order, already strained by rivalry, may be unprepared for a world in which weaponized technological interdependence is both vulnerability and necessity. These asymmetries will shape the conditions under which Homo HURAQUS emerges, determining whether it evolves within a cooperative or a fragmented global system.

Culture Under Algorithmic Pressure: Transcultural Security in the Digital Sphere

Technology is becoming a primary mediator of communication, identity, and meaning. AI-driven platforms already influence political discourse, shape opinion, and filter information. As quantum-enhanced AI increases system sophistication and enables ever more personalized micro-targeting, the risk grows that algorithmic monocultures and deliberate manipulation will marginalize linguistic and cultural diversity. Globalized AI norms may privilege the values of technologically dominant societies, accelerating cultural homogenization, reinforcing hierarchies, and widening social schisms.

Humanoid robots trained on narrow datasets may misread local customs or reinforce stereotypes. In multicultural societies, poorly calibrated AI could intensify discrimination or weaken social cohesion. Disinformation campaigns powered by generative AI can manipulate cultural narratives, deepen polarization, and destabilize fragile geopolitical environments. At the same time, unequal access to frontier technologies risks creating resentment between advanced societies and those left behind.

Transcultural security is therefore essential for creating a sustainable, peaceful global order. Geo-cultural domains are not merely political units but repositories of identity and belonging. If frontier technologies erode respect and cultural plurality, or undermine minority dignity and self-worth, the effects will reverberate across social systems, political institutions, and international relations. The cultural landscape in which Homo HURAQUS may evolve will depend on preserving plurality, respect, and recognition while ensuring that technological norms do not flatten or divide human civilization.

Outer Space: The Expanding Frontier

Outer Space is becoming a critical extension of the civilizational frontier, where convergent technologies reshape security and governance. Quantum-secure satellite constellations, AI-enabled autonomy, and robotic or bioengineered agents will influence space-based deterrence, crisis management, and domain awareness. As lunar and asteroid resources attract strategic interest, the risk of monopolization, dual-use competition, and orbital degradation grows. These dynamics echo emerging contests over terrestrial critical resources but unfold in an environment where miscalculations are harder to detect and correct. Human-robot enabled entities are best suited to explore deep space and establish colonies, as unaugmented humans remain too biologically vulnerable to withstand its harsh conditions for long. Governance must anticipate machine-speed operations in outer space to ensure that Homo HURAQUS emerges as a stabilizing force, rather than a source of civilizational risk.

The Human Drives Behind the Machines: The Neuro-P5

Civilizational frontier risks do not arise solely from technological capability. They are amplified by the Neuro-P5, the five primal human drives (power, profit, pleasure, pride, and permanency) that govern the behavior of individuals and states alike. This dynamic reflects my theory of Emotional Amoral Egoism, which holds that both humans and states are driven by emotionally charged, amoral, and egoistic impulses. These impulses profoundly condition how disruptive technologies are developed, adopted, regulated, weaponized, or constrained.

The pursuit of power fuels technological arms races and encourages states to prioritize strategic advantage over global cooperation. Profit incentivizes rapid commercialization and weak regulatory oversight. Pleasure drives the adoption of technologies that may erode cognitive autonomy or create new forms of dependency. Pride spurs nations and corporations to compete for technological supremacy and hierarchical standing. The drive for permanency manifests in the search for longevity, genetic enhancement, and digital forms of immortality, all of which raise profound questions about inequality, identity, and the meaning of life. The Neuro-P5 may also shape the trajectory toward Homo HURAQUS, influencing whether hybrid human-machine evolution is pursued for collective benefit or narrow advantage. To govern convergent technologies effectively, it is essential to understand how they interact with these fundamental human motivations. Technology does not exist outside human psychology or the animus dominandi (our drive for domination and control); it magnifies them.

Artificial Intelligent Agents: Rights, Dignity, and Responsibility

As Homo HURAQUS and other advanced AI systems approach functional autonomy, sentience, and self-directed agency, ethical frameworks must address when such entities merit rights, dignity, and responsibilities. As I have argued elsewhere, relevant criteria include emotionality, amorality, egoism, and capacities for reasoning, auto-memory, self-preservation, goal-directed behavior, and moral or ethical awareness. Recognizing these attributes is not about anthropomorphizing machines but about ensuring accountability, guiding their integration into society, and protecting human dignity when interacting with systems exhibiting quasi-agency. Properly conceived, these principles anchor governance in a future where artificial and human agency coexist.

A Framework for Governing the Edge: Meta-Geopolitics and the Seven Capacities of States

Managing civilizational frontier risks requires a framework that captures the full spectrum of technological effects. The Meta-Geopolitics framework examines state resilience across seven interdependent capacities: (1) social and health systems, (2) domestic politics, (3) the economy, (4) the environment, (5) science and human potential, (6) the military and security apparatus, and (7) international diplomacy. Convergent technologies reshape all seven, so weakness in any domain can cascade across the others.

Social and health systems may benefit from AI diagnostics, quantum-enabled modeling, and bioengineered therapies, yet face rising inequality, privacy risks, and biosecurity threats. Domestic politics can become more efficient but remains vulnerable to disinformation, deepfakes, and surveillance. Economic gains from automation, synthetic biology, and quantum technologies are tempered by job displacement and global competition. Environmental tools offer climate solutions but create energy demands, resource pressures, and ecological risks. Scientific discovery accelerates but may concentrate knowledge among a few actors. Military and security domains are transformed by autonomous systems and quantum-secure communications, yet legal, ethical, and operational uncertainties remain. Diplomacy must navigate cooperation alongside heightened tensions, as verification and trust become harder to sustain.

Recognizing these interdependencies is essential for governance that strengthens resilience across all dimensions of state power. The Meta-Geopolitics framework provides a systems-level perspective to guide humanity through the emerging Homo HURAQUS era, in which technological convergence may either stabilize or destabilize civilization.

Strategic Imperatives for Governing the Frontier

My Sustainable Security Theory underscores that lasting stability requires advancing five interdependent dimensions of security: human, environmental, national, transnational, and transcultural. In this Multi-Sum Security world, no actor can be sustainably secure unless all are secure across these interconnected domains.

In light of these challenges, three strategic imperatives should guide global governance of convergent technologies. The first is the Transdisciplinary Philosophy imperative, grounded in the Transdisciplinary Philosophy Manifesto. Traditional policy frameworks cannot keep pace with technologies that reshape biology, cognition, and political order. Philosophers, ethicists, neuroscientists, engineers, sociologists, security specialists, and policymakers must collaborate to anticipate long-range consequences and craft principles that reflect both human nature and technological complexity. This approach is reinforced by Neuro-Techno-Philosophy, which examines how emerging technologies interact with cognition, emotion, and behavior.

The second imperative is dignity-based governance, which holds that human dignity must anchor technology regulation. Technologies that erode autonomy, privacy, fairness, or cultural identity will ultimately weaken societies, even if they yield short-term gains. The nine essential dignity needs (reason, security, human rights, accountability, transparency, justice, opportunity, innovation, and inclusiveness) are both an ethical foundation and a stabilizing governance force, ensuring that technological progress does not corrode equitable human flourishing (per my Sustainable History Theory).

The third imperative is Symbiotic Realism, a pragmatic geopolitical ethic recognizing the interdependence of states in an era of convergent risks. No nation can confront quantum-enabled cyber threats, AI-engineered pathogens, or autonomous escalation alone. Global security now depends on reconciliation statecraft, win-win scenarios, absolute gains, non-conflictual competition, reciprocity, and shared responsibility. Symbiotic Realism rejects zero-sum assumptions and argues that stability requires governing technologies that transcend economic and geographic borders. Such cooperation will be essential if the emergence of Homo HURAQUS is to strengthen the global order rather than destabilize it.

At the Edge of the Possible

The future of humanity stands at the threshold of an unpredictable and highly disruptive convergent technological revolution. The convergence of AI, quantum computing, synthetic biology, and dexterous humanoid robotics will reshape every sphere of human life, offering vast opportunity while upending long-held assumptions about security and identity and straining systems built for slower eras. This extremely consequential frontier will test whether humanity can safeguard its survival, security, and prosperity without sacrificing human dignity needs. Technologies magnify both constructive and destructive capacities, as well as uncertainty, making governance a civilizational imperative that must be anchored in dignity in a hybrid human-machine era.

Highly disruptive convergent technologies may spur non-conflictual competition yet also intensify asymmetries, setting off cascading schisms and conflict. How humanity navigates these currents will determine whether Homo HURAQUS emerges within a pluralistic, equitable, dignified global ecosystem or one shaped by division, conflict and a deeply chaotic future.

The risks facing humanity cannot be managed by traditional statecraft or reactive regulation. They require foresight, ethical clarity, and an unwavering commitment to universal human dignity, as the civilizational challenges at stake are immense, unpredictable, and potentially existential. Choices made in the coming decade will decide whether this frontier becomes a horizon of opportunity or a precipice, and whether Homo HURAQUS strengthens human flourishing or undermines it.

Nayef Al-Rodhan

Prof. Nayef Al-Rodhan is a Philosopher, Neuroscientist and Geostrategist. He holds an MD and PhD, and was educated and worked at the Mayo Clinic, Yale, and Harvard University. He is an Honorary Fellow of St. Antony's College, Oxford University; Head of the Geopolitics and Global Futures Department at the Geneva Centre for Security Policy; Senior Research Fellow at the Institute of Philosophy, School of Advanced Study, University of London; Member of the Global Future Councils at the World Economic Forum; and Fellow of the Royal Society of Arts (FRSA).

In 2014, he was voted one of the Top 30 most influential neuroscientists in the world; in 2017, he was named among the Top 100 geostrategists in the world; and in 2022, he was named one of the Top 50 influential researchers whose work could shape 21st-century politics and policy.

He is a prize-winning scholar who has written 25 books and more than 300 articles, including, most recently, 21st-Century Statecraft: Reconciling Power, Justice and Meta-Geopolitical Interests; Sustainable History and Human Dignity; Emotional Amoral Egoism: A Neurophilosophy of Human Nature and Motivations; and On Power: Neurophilosophical Foundations and Policy Implications. His current research focuses on transdisciplinarity, neuro-techno-philosophy, and the future of philosophy, with a particular emphasis on the interplay between philosophy, neuroscience, strategic culture, applied history, geopolitics, disruptive technologies, Outer Space security, international relations, and global security.